Predictability and its dangers

An introduction to information entropy

We’re not just a math business; we’re a math technology business. So on top of having an awesome team of mathematicians, we also have an incredible group of engineers working here. Mansour is our Lead Infrastructure Engineer. That basically means he is responsible for ensuring that Mathspace stays healthy and happy.

Some of Mansour’s unpredictable traits include his hair color, his coffee preferences and his music taste. You could even say he is someone with high entropy… Read on to understand what I’m talking about!

Coffee of choice?

I’m all over the place with my coffee. At the moment I’m having almond cappuccinos. But before that I was getting my coffee from 7/11. Instant decaf finds its way into my cup here and there too!

Favorite mathematical field of study?

Entropy. Specifically entropy in the context of Information Theory (as opposed to Thermodynamics). It’s not really a field in mathematics, but it does involve a fair bit of math.

Put simply, entropy is a measure of unpredictability.

Can you give us an example of entropy?

I’ve got just the thing! Imagine we’re talking on the phone and I’m trying to get you to draw this diagram precisely:

I’d say something like:

“It’s a 4 by 4 square grid with 1 cm block size, but draw only a dot where the lines intersect, including corners and T sections.”

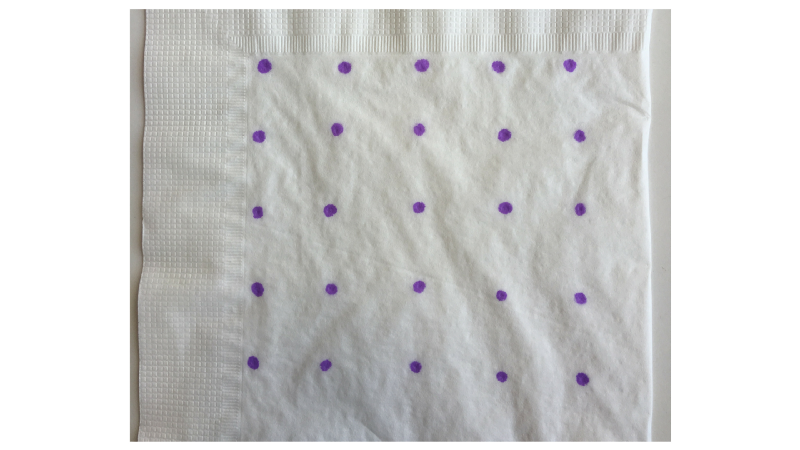

Now what if I were to describe this diagram (below) to you? It has the exact same number of dots, but describing it would not be as easy!

Remember, I want you to draw exactly what I see, not just something that looks like it. I could tell you to draw a dot…

Move your pen 3.42 cm right, and 1.23 cm down and draw another dot, then move 0.2 cm up, 4 cm right and draw another dot...

There are 25 dots, so you can imagine how long this description would take.

Why is it that we can describe the first diagram with a single sentence, while the second one would take minutes to describe, even though they both have the same number of dots? It’s because of how much information each diagram contains and the location of the dots is that information.

The dots in the first diagram are arranged such that one can derive location of subsequent ones, by knowing the location of the first. Simply add 1 cm in each direction. However, the location of the dots in the second diagram seem to follow no such pattern. They look randomly placed. Or alternatively, their location is unpredictable. We can say the second diagram has high entropy and the first diagram has low entropy.

You might have noticed that nothing would stop you from describing the first diagram dot by dot, like we did with the second. If we did, first diagram would still be low entropy. Entropy is not about a certain presentation of data, like our description over the phone, but rather the information density of that data, the inherent fact that the location of dots are predictable. So why is it that our description of the first diagram is much shorter than that of the second. The answer is “compression”.

Thanks to our rich natural language, as well as our knowledge of mathematics and logic, we have compressed the information in the first diagram into a few words. What’s more, those few words themselves have high entropy! Because they contain the same amount of information as is in the diagram, but in a single sentence, not a page full of dots!

Now, what if I told you that you could draw the second diagram precisely given a simple formula and the numbers 1 to 25 to substitute in it?

Woah, now the second diagram is suddenly low entropy as well because we can compress it down to a sentence. How can it be high and low entropy at the same time? The answer is that it was low entropy all along.

The lesson here is that humans are terrible at determining entropy. That’s why we have mathematical formulas to calculate it.

Why is entropy important?

Entropy is one of the underpinning ideas of the Information Theory, which itself is the basis of storage, communication and processing in all of computing today — making things like mobile phones and the Internet possible.

Entropy is also what makes effective cryptography possible. In short, cryptography is the study of methods of communication, namely encryption, with another party in a private and secure way so that others can’t ‘snoop’.

It is perhaps the most effective tool for protecting our privacy today.

Encryption is what protects us from online criminals, as well as companies and governments. These are people and groups who have, and always will, try to extend their reach into our private lives.

For example, one way an effective encryption helps protect our privacy, is by increasing the entropy, or the randomness of a message. So much that it becomes nearly impossible to find patterns in it, and to subsequently decode and read it. This same property of an encrypted message, its high entropy, makes it indistinguishable from noise which allows someone to deny having encrypted data at all. This is called “Plausible Deniability”.

Can you tell us about the history of information entropy?

Mathematician Claude E. Shannon pretty much established the field of Information Theory by publishing “A Mathematical Theory of Communication” article in 1948.

Information Entropy’s namesake is a similar concept in statistical mechanics where entropy can be understood as a measure of disorder of gas molecules in a room, for instance. The more neatly arranged the molecules are, the lower entropy configuration they have and vice-versa.

Shannon initially used the term “uncertainty” until John von Neumann suggested “Entropy” noting that the same concept and similar formula had been used by physicist already and “more [importantly], nobody [knew] what entropy really [was], so in a debate [he would] always have the advantage”.

Much of the development of Information Theory since Shannon’s paper has been around data compression and error correcting codes. Entropy is quite tightly related to compression. Put simply, the higher the entropy of some data, the harder it is to squeeze pack it.

Why are you so interested in information entropy?

I first learnt about entropy while studying computer science at university.

Back then I didn’t really pick up on its profound reach. It wasn’t until after my studies that I came across methods of finding “interesting” data by solely looking at its entropy. I love data and if you ask anyone who does, you’ll find that data is abundant, and most of it is just noise. Filtering out noise is always a challenge and the measure of entropy is a powerful tool to weed out the noise.

A while back my colleague Andy did this Coffee + Math interview, and she explored the beauty of when completely independent fields of mathematics, are suddenly found to be related by an equation.

The incredible thing about entropy is that it connects seemingly unrelated disciplines — mathematics and natural language.

Mathematics is derived from pure logic and reasoning, whereas natural language evolved organically through usage. The entropy of a piece of text can tell you the information density (or put more simply, the amount of “fluff” in the text). It can tell you if it’s a scientific paper or a novel. It might even tell you if it’s written by a particular author. All of that, from a single number that’s mathematically calculated from individual letters of that text!

Pretty cool, huh?

Anything else interesting to add?

Music lyrics are actually really interesting when you think about them in the context of entropy.

I really like songs with dense lyrics. So I decided to go about finding such songs. One approach was to go through a set and eyeball which ones qualify. Not very practical though, given how many songs are out there. So as any good computer programmer would do, I wrote a program that would calculate the entropy of a given lyrics. I then ran it across a (not so comprehensive) selection of 200K song lyrics and sorted them by highest to lowest entropy.

These were the top 5 dense lyrics (of 2K letters or more):

- Prologue by Frank Zappa

- That Evil Prince, by Frank Zappa

- N Fronta Ya’ Mama House, by Keak Da Sneak

- Leisureforce, by Aesop Rock

- 40 Acres, by Common Market

and these were the top 5 sparse lyrics:

- Wire Lurker, by D-Mode-D

- Nom Nom Nom Nom Nom Nom Nom, by Parry Gripp (my favorite)

- Honey, by Moby

- Take Me Away, by Eric Prydz

- Gangster Tripping, by Fatboy Slim

Despite my love of dense lyrics, I’m much more likely to listen to ‘Honey’ than ‘Prologue’. I can only speculate that something like taste, is far more overpowering than simple measures such as entropy.